How to use ChatGPT to answer the queries from structured tabular data

Introduction

The objective of this article is to explore the potential of the OpenAI API in answering queries by extracting knowledge from tabular data. As an example, let’s consider the question “Who is the highest salaried employee in our company?” This question can be effectively answered using the OpenAI API. However, in order to achieve this, we need to provide OpenAI with the necessary knowledge about our employee details. There are multiple approaches to sharing our own knowledge database with OpenAI. Commonly used methods include fine-tuning the model with our specific knowledge base and providing relevant context within the prompt. By employing these techniques, we can leverage the power of ChatGPT to obtain accurate and informative responses to queries related to our tabular data.

Method 1: Fine-tuning the Model with Your Own Dataset

Fine-tuning the LLM model with our own dataset allows OpenAI to provide answers tailored to our specific situations. However, this process can be resource-intensive and time-consuming. It is important to note that fine-tuning does not limit OpenAI to only our knowledge, which means it may generate responses that go beyond the information we have provided. This can result in what we call “hallucinations” or outputs that are not entirely accurate or reliable.

Method 2: Providing Additional Context to the Prompt

In this approach, we can guide OpenAI on how to answer a question by providing specific instructions. For example, we can ask OpenAI, “Who is born in the month of July? Answer this question based on the following list: ‘abc’ born in January, ‘xyz’ born in July, ‘123’ born in May.”

In this example, our input prompt includes additional context on how the question should be answered. We instruct OpenAI to limit its knowledge base to the provided list.

Similarly, we can include tabular data as context in the OpenAI prompt, allowing it to answer questions based on that input.

However, it’s important to note that passing the entire tabular data as context may not be feasible. OpenAI has limitations on the number of input tokens, and there may be associated costs. To overcome this, we can extract the relevant information from our tabular data and provide it as input context to OpenAI.

Workflow for the Proposed Approach

- Send a question to OpenAI along with the table information and receive the SQL statement.

- Execute the SQL statement in the database.

- Include the result set from the database as context along with the original question in the OpenAI prompt.

- Instructing OpenAI to answer the question solely based on the result set.

Implementation Reference

- Set up a SQLite database for storing employee information.

import sqlite3

conn = sqlite3.connect("employees.db")

cursor = conn.cursor()

cursor.execute("""

CREATE TABLE IF NOT EXISTS employees (

empid TEXT,

empname TEXT,

salary INTEGER,

dob TEXT,

department TEXT,

date_of_join TEXT,

city TEXT

)

""")

conn.commit()

conn.close()

- Generate a CSV file containing employee data and import it into SQLite.

import sqlite3

import pandas as pd

df=pd.read_csv("employee.csv")

conn = sqlite3.connect('employees.db')

df.to_sql('employees', conn, if_exists='replace', index=False)

conn.close()

- Execute the code to make OpenAI API calls and retrieve the results. Please ensure to include your OpenAI API key in the code.

import sqlite3

import openai

import json

text = input('Hi, This is a employee manager assitant, please ask me if you have any quries about employees \n')

conn = sqlite3.connect('employees.db')

cursor = conn.cursor()

# Add your OpenAI key

openai.api_key = ''

def get_result_prompt(cursor,question,sql_query):

column_names = [description[0] for description in cursor.description]

values = cursor.fetchall()

prompt = """I want you to answer the input question based on the table columns,input SQL query and it's result, if the input result is empty then return the output as 'No data found', You should not tell about SQL queries and tables in the result, If something wrong then return 'Try it in another way'. table columns are: {}, input SQL is: {}, input result is :{} and the input question is: {}. The output should be in natural language text.""".format(column_names,sql_query,values,question)

return prompt

def get_table_columns(table_name):

cursor.execute("PRAGMA table_info({})".format(table_name))

columns = cursor.fetchall()

#print(columns)

return [column[1] for column in columns]

def generate_sql_query(table_name,text,columns):

prompt = """You are a ChatGPT language model that can generate SQLite queries. Please provide a natural language input text, and I will generate the corresponding SQL query for you.The table name is {} and corresponding columns are {}.\nInput: {}\nSQL Query:""".format(table_name,columns,text)

request = openai.ChatCompletion.create(

model="gpt-3.5-turbo-0301",

messages=[

{"role": "user", "content": prompt},

]

)

sql_query = request['choices'][0]['message']['content']

return sql_query

def execute_sql_query(query):

cursor.execute(query)

return cursor

table_name = 'employees'

columns = get_table_columns(table_name)

sql_query=generate_sql_query(table_name,text,columns)

#print("Generated SQL query: ",sql_query)

if sql_query:

result=execute_sql_query(sql_query)

#print("ChatGPT Response=>",result)

result_prompt=get_result_prompt(result,text,sql_query)

#print(result_prompt)

request = openai.ChatCompletion.create(

model="gpt-3.5-turbo-0301",

messages=[

{"role": "user", "content":result_prompt},

]

)

answer = request['choices'][0]['message']['content']

print("Answer: ",answer)

# Close database connection

cursor.close()

conn.close()

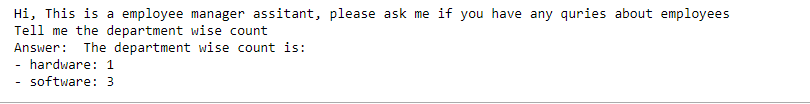

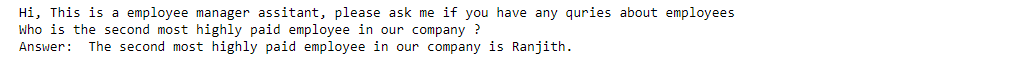

- Sample result